Accurate information forms the foundation of sound analysis and reliable planning. Organizations depend on clean and precise web data to support reporting, forecasting, and operational insight. When collected information contains errors or gaps, outcomes become uncertain and decisions weaken.

Intelligent extraction architectures address this challenge by applying structured logic and adaptive processes that improve precision at every stage of collection.

Modern extraction systems focus on quality rather than volume. They prioritize relevance, validation, and consistency while reducing noise from unreliable sources. By aligning extraction methods with intelligent design, teams gain stronger confidence in their datasets.

This approach supports clarity, reduces correction efforts, and enables dependable use of collected information across multiple analytical needs.

How Does Intelligent Web Extraction Architecture Enhance Accuracy?

Intelligent web extraction architectures combine automation with decision logic to enhance accuracy. These systems observe content structure, response behavior, and data patterns to guide collection actions. Such awareness reduces misreads and incomplete captures.

Within these architectures, controlled browsing environments are often applied to interpret complex page behavior. Thoughtfully configured tools like scraping browser solutions support structured rendering and interaction handling, allowing systems to capture accurate values while maintaining consistency.

This layered design ensures extraction processes remain stable and focused on quality outcomes.

Key Drivers of Precision in Modern Data Extraction Systems

Data accuracy improves when extraction systems address common error sources proactively. Intelligent architectures focus on prevention rather than correction. The following factors highlight how precision is strengthened through structured design.

- Structured Parsing Rules: Reduce misinterpretation of content elements during extraction.

- Context Awareness: Improves selection of relevant information while ignoring unrelated page components.

- Response Validation: Confirms successful retrieval before storing values.

- Duplicate Detection: Prevents repeated entries from reducing dataset reliability.

- Adaptive Logic: Adjusts extraction paths when content structure changes unexpectedly.
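
As one illustration of the drivers above, duplicate detection can be sketched by fingerprinting each record after light normalization. This is a minimal example rather than a prescribed method: the field names, the sample rows, and the helpers `record_key` and `deduplicate` are all hypothetical, and the normalization (collapsing whitespace, lowercasing) is an assumption that may be too aggressive for some datasets.

```python
import hashlib
import json


def record_key(record, fields):
    """Build a stable fingerprint from the chosen fields of a record."""
    # Collapse whitespace and lowercase so cosmetic differences do not
    # produce distinct keys (an assumption; adjust per dataset).
    normalized = {
        f: " ".join(str(record.get(f, "")).split()).lower() for f in fields
    }
    payload = json.dumps(normalized, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def deduplicate(records, fields):
    """Keep the first occurrence of each distinct record, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record, fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical sample data: the second row repeats the first with
# different spacing and casing.
rows = [
    {"name": "Widget A", "price": "19.99"},
    {"name": "  widget   a ", "price": "19.99"},
    {"name": "Widget B", "price": "24.50"},
]
unique_rows = deduplicate(rows, ["name", "price"])
```

Keying on an explicit list of fields keeps volatile values such as timestamps out of the fingerprint, so the same item collected twice still counts as a duplicate.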

The Role of Intelligent Parsing in Maintaining Data Integrity

Parsing plays a critical role in determining data correctness. Intelligent parsing methods analyze structure, labels, and patterns before capturing values. This analysis helps distinguish meaningful information from decorative or irrelevant elements.

Advanced parsing logic also supports adaptability. When page layouts shift, intelligent systems adjust selectors rather than failing silently. This adaptability reduces broken datasets and supports long-term accuracy across repeated extraction cycles.
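
A simple way to picture selector adjustment is an ordered list of fallback selectors, with an explicit error when none of them match. The sketch below uses Python's standard `xml.etree.ElementTree` and therefore assumes well-formed markup; real pages would usually need a tolerant HTML parser. The selector paths, class names, and sample snippets are hypothetical.

```python
import xml.etree.ElementTree as ET


class SelectorError(Exception):
    """Raised when no selector yields a value, so failures are never silent."""


def extract_first(root, selectors):
    """Try each selector in order; return (value, selector_used)."""
    for path in selectors:
        node = root.find(path)
        if node is not None and node.text and node.text.strip():
            return node.text.strip(), path
    raise SelectorError(f"no selector matched: {selectors}")


# Hypothetical selectors: the first targets the original layout,
# the second a later redesign.
PRICE_SELECTORS = [
    ".//span[@class='price']",
    ".//div[@class='product-price']",
]

old_layout = ET.fromstring("<div><span class='price'> 19.99 </span></div>")
new_layout = ET.fromstring("<div><div class='product-price'>21.50</div></div>")

value_old, used_old = extract_first(old_layout, PRICE_SELECTORS)
value_new, used_new = extract_first(new_layout, PRICE_SELECTORS)
```

Returning the selector that matched makes it possible to log when a fallback starts being used, which is often the first sign that a layout has changed.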

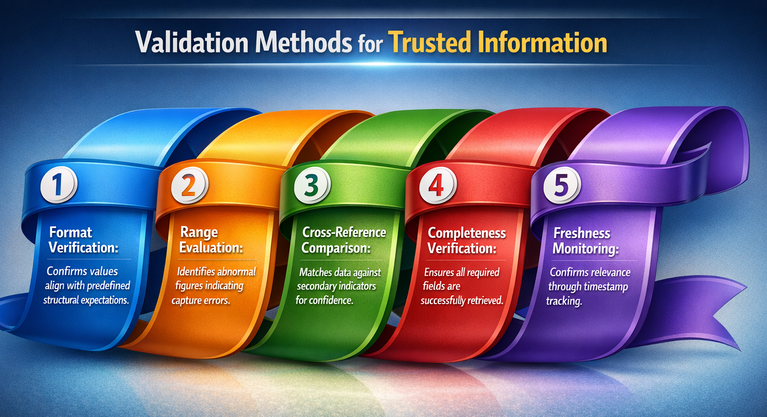

Implementing Robust Validation Methods for Trusted Information

Validation ensures that collected data matches expected formats and logical ranges. Intelligent architectures embed checks that verify accuracy during and after extraction.

- Format Verification: Confirms values align with predefined structural expectations.

- Range Evaluation: Identifies abnormal figures indicating capture errors.

- Cross-Reference Comparison: Matches data against secondary indicators for confidence.

- Completeness Verification: Ensures all required fields are successfully retrieved.

- Freshness Monitoring: Confirms relevance through timestamp tracking.
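
Several of the checks above can be combined into a single validation pass. The following is a minimal sketch under stated assumptions: the record schema (`name`, `price`, `fetched_at`), the price pattern, the accepted range, and the 24-hour freshness window are all hypothetical, and cross-reference comparison is left out because it depends on a second data source.

```python
import re
from datetime import datetime, timedelta, timezone

# Hypothetical schema and limits, chosen only for illustration.
REQUIRED_FIELDS = ("name", "price", "fetched_at")
PRICE_PATTERN = re.compile(r"^\d+(\.\d{1,2})?$")


def validate_record(record, now, max_age=timedelta(hours=24),
                    price_range=(0.01, 10_000.0)):
    """Return a list of problem codes; an empty list means the record passed."""
    problems = []

    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            problems.append(f"missing:{field}")

    # Format, then range: only range-check values that parse cleanly.
    price = record.get("price")
    if price:
        if not PRICE_PATTERN.match(price):
            problems.append("format:price")
        else:
            low, high = price_range
            if not low <= float(price) <= high:
                problems.append("range:price")

    # Freshness: flag records older than the allowed window.
    fetched_at = record.get("fetched_at")
    if fetched_at and now - fetched_at > max_age:
        problems.append("stale")

    return problems


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
good = {"name": "Widget A", "price": "19.99",
        "fetched_at": now - timedelta(hours=1)}
bad = {"name": "", "price": "19,99",
       "fetched_at": now - timedelta(days=3)}

good_problems = validate_record(good, now)
bad_problems = validate_record(bad, now)
```

Returning problem codes instead of a single pass/fail flag lets downstream steps decide whether to drop the record, retry the fetch, or route it for review.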

Strategies for Error Reduction and Continuous Troubleshooting

Reducing errors requires continuous observation and adjustment. Intelligent systems track failure points and refine logic accordingly.

- Logging Mechanisms: Record behavior to support targeted troubleshooting.

- Retry Logic: Handles temporary access issues without storing incomplete data.

- Adaptive Delays: Reduce missed content caused by loading variations.

- Exception Handling: Prevents partial failures from contaminating datasets.

- Continuous Testing: Reveals weaknesses before large-scale extraction runs.
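
Retry logic, logging, and exception handling from the list above might fit together as in the sketch below. It is an assumption-laden example, not a reference implementation: `TransientError` is a hypothetical marker for failures worth retrying, `fetch` stands in for whatever callable performs the request, and the exponential backoff values are arbitrary.

```python
import logging
import time

logger = logging.getLogger("extraction")


class TransientError(Exception):
    """Hypothetical marker for temporary failures such as timeouts."""


def fetch_with_retry(fetch, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fetch() until it succeeds or the attempts run out.

    Nothing is returned for a failed attempt, so incomplete data is never
    stored; the last error is re-raised once retries are exhausted.
    """
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch()
        except TransientError as exc:
            last_error = exc
            logger.warning("attempt %d failed: %s", attempt + 1, exc)
            if attempt < attempts - 1:
                # Exponential backoff: base_delay, 2x, 4x, ...
                sleep(base_delay * 2 ** attempt)
    raise last_error
```

Passing `sleep` as a parameter is a design choice made here so the backoff schedule can be tested without actually waiting.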

Enhancing System Adaptability in Dynamic Web Environments

Web content changes frequently, making adaptability essential for accuracy. Intelligent architectures monitor structure variations and adjust extraction paths automatically. This responsiveness reduces manual updates and downtime.

Adaptable systems also support scalability. As data needs expand, extraction logic extends without sacrificing precision. This balance ensures accuracy remains consistent even as volume and complexity increase.
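
One possible way to monitor structure variations is to fingerprint the tag skeleton of a page and compare it with a stored baseline. The sketch below uses the standard `html.parser` module; the idea of hashing tag names together with their `class` attributes is an assumption made for illustration, and the sample pages are hypothetical.

```python
import hashlib
from html.parser import HTMLParser


class TagCollector(HTMLParser):
    """Collects the sequence of opening tags and their class attributes."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.tags.append(f"{tag}.{classes}")


def structure_fingerprint(html):
    """Hash the page skeleton, ignoring text content."""
    collector = TagCollector()
    collector.feed(html)
    collector.close()
    skeleton = "/".join(collector.tags)
    return hashlib.sha256(skeleton.encode("utf-8")).hexdigest()


# Hypothetical pages: same layout with new text, then a changed layout.
baseline = structure_fingerprint("<div><span class='price'>19.99</span></div>")
same_layout = structure_fingerprint("<div><span class='price'>21.50</span></div>")
new_layout = structure_fingerprint("<div><p class='amount'>21.50</p></div>")

layout_changed = new_layout != baseline
```

A changed fingerprint does not say what moved, only that the extraction paths for that page should be rechecked before the next run.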

Continuous Quality Monitoring Throughout the Extraction Lifecycle

Ongoing monitoring protects data integrity throughout the extraction lifecycle. Intelligent architectures include feedback loops that measure quality indicators regularly.

- Accuracy Scoring: Highlights trends in data reliability across collection cycles.

- Drift Detection: Identifies gradual changes affecting extraction precision.

- Performance Metrics: Reveal timing issues impacting content capture.

- Sample Reviews: Provide human verification for automated assessments.

- Alert Systems: Notify teams when accuracy thresholds fall below accepted levels.
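
The feedback loop described above can be sketched as a small check over per-run accuracy scores. This is illustrative only: the 0.95 threshold, the three-run window, the drift tolerance, and the alert codes are hypothetical values, and comparing the mean of the latest window with the mean of the previous one is just one simple way to detect drift.

```python
from statistics import mean


def accuracy_score(valid, total):
    """Share of records that passed validation in one collection run."""
    return valid / total if total else 0.0


def check_quality(scores, threshold=0.95, window=3, drift_tolerance=0.02):
    """Return alert codes for the latest run given the score history."""
    alerts = []
    if not scores:
        return alerts

    # Threshold alert: the most recent run fell below the accepted level.
    if scores[-1] < threshold:
        alerts.append("below_threshold")

    # Drift alert: the recent average has slipped relative to the
    # average of the window before it.
    if len(scores) >= 2 * window:
        recent = mean(scores[-window:])
        earlier = mean(scores[-2 * window:-window])
        if earlier - recent > drift_tolerance:
            alerts.append("drift")

    return alerts


# Hypothetical history: no single run is below threshold,
# but quality is sliding gradually.
history = [0.99, 0.99, 0.98, 0.96, 0.96, 0.95]
history_alerts = check_quality(history)
```

Separating the threshold check from the drift check means a slow decline can be flagged before any single run actually falls below the accepted level.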

Conclusion: Achieving Reliable Outcomes with Advanced Automation

Accurate information enables dependable insight and confident decision making. Intelligent web extraction architectures deliver this accuracy through structured design, adaptive logic, and continuous validation. By focusing on prevention, monitoring, and refinement, these systems reduce uncertainty and correction effort.

When advanced automation tools, such as undetected playwright, are applied thoughtfully within these architectures, extraction processes maintain accuracy even while handling complex content behavior.

This integrated approach offers a practical solution for organizations seeking reliable data foundations and consistent analytical outcomes.